The State and Future of Remote Work

As noted in a recent article published by American City Business Journals, the state and future of remote work are still up for debate. Remote and hybrid work arrangements continue to face resistance. The reduced need for office space continues to affect city centers and commercial real estate markets. And yet, employees still want remote and hybrid work arrangements. The desire for work-from-home options is strong enough that many employees will take a pay cut in exchange for the flexibility.

Some of the Data

Work from Home Research reports that about 27% of paid full workdays in 2023, year to date, were worked outside the office. This is a very slight decrease from recent months.

In February 2023:

- 60% of employees worked full-time in the office

- 28% of employees worked in a hybrid arrangement

- 12% of employees worked remotely full time

In other words, 40% of employees (28% hybrid plus 12% fully remote) continue to work some or all of their time outside the office.

A recent study by Robert Half found:

- 28% of job postings were advertised as remote

- 32% of employees who work in the office at least one day per week would accept an average 18% pay cut to work remotely full time

Data from the Federal Reserve indicates that:

- From 2020 to 2021, during the surge in remote work, productivity (output per hour) rose from 108.57 to 115.3, an increase of roughly 6%

- In 2022, productivity dropped slightly as more employees returned to the office

Using the Data

Remote and hybrid work arrangements will likely continue as companies and employees work to find the right balance between their needs. As small business leaders, we understand that remote work is an attractive feature of job postings, and that roughly one-third of employees would take a pay cut or change jobs to work remotely.

We need to manage our remote and hybrid work arrangements in ways that employees see as flexible and accommodating.

In-person interactions with colleagues can improve morale and enhance company culture. It makes sense that we want most employees in the office, interacting face-to-face, at least some of the time.

Employees see most hybrid work arrangements as designed to meet the needs of the company, not their own. They see incentives, such as free meals and other “perks”, as gimmicks to lure employees back to the office without addressing their needs. We need to present hybrid work arrangements honestly, in terms of both the company's needs and priorities and those of employees. If we strike a real balance between the two, employees will feel respected and heard, and they will be more accepting of change.

The Role of Technology

We have no doubt that technology can empower your employees to do their best work, in the office or remotely. Many small businesses scrambled to support remote work at the onset of the pandemic. These solutions were often rushed and, as a result, less efficient or effective than needed. Too many of us, however, have not stepped back to assess, revise, and improve our IT support for remote and hybrid work.

We need support and technologies in place to ensure the long-term viability of remote and hybrid work.

Employees, when working remotely, want and need the same resources and abilities as when they are working in the office. They want the same user experience regardless of where or how they work. At the same time, we need to ensure our systems and data remain secure and protected.

When assessing your IT services, make sure you have the SPARC you need:

- Security

- Performance

- Availability

- Reliability

- Cost

By leveraging cloud services, you can provide secure access to your systems and data, with a consistent user experience, at a reasonable cost.

Calls to Action

1. Read our recent eBook, Cloud Strategies for Small and Midsize Businesses. In this eBook, we set the stage by looking at how small and midsize businesses acquire and use technology and IT services, explore the challenges we face moving into the cloud, and map out four strategies for enhancing and expanding your use of cloud services.

2. Schedule time with one of our Cloud Advisors or contact us to discuss how you can best support your remote and hybrid workers. The conversation is free, without obligation, and at your convenience.

About the Author

Allen Falcon is the co-founder and CEO of Cumulus Global. Allen co-founded Cumulus Global in 2006 to offer small businesses enterprise-grade email security and compliance using emerging cloud solutions. He has led the company’s growth into a managed cloud service provider with over 1,000 customers throughout North America. Starting his first business at age 12, Allen is a serial entrepreneur. He has launched strategic IT consulting, software, and service companies. An advocate for small and midsize businesses, Allen served on the board of the former Smaller Business Association of New England, local economic development committees, and industry advisory boards.

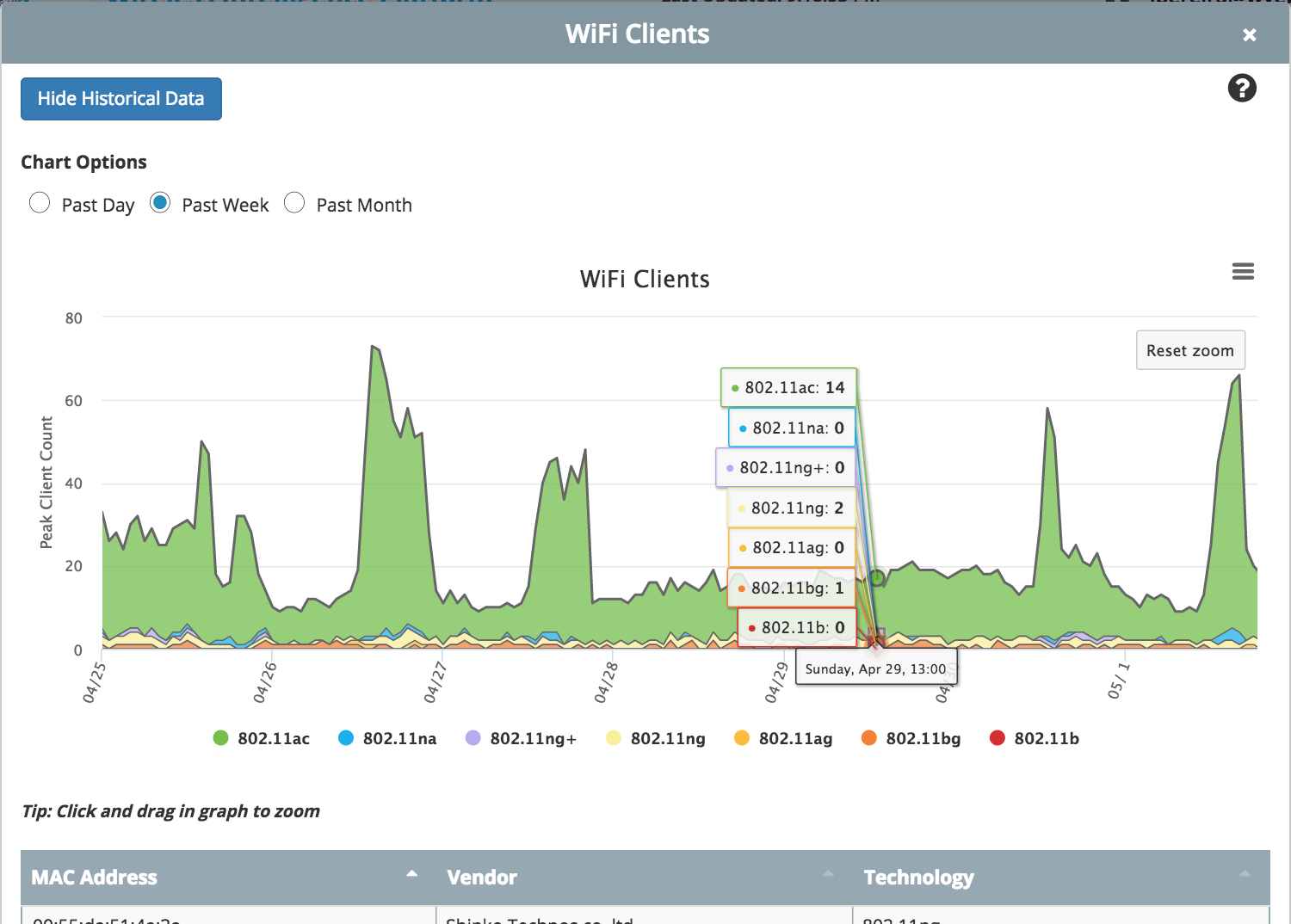

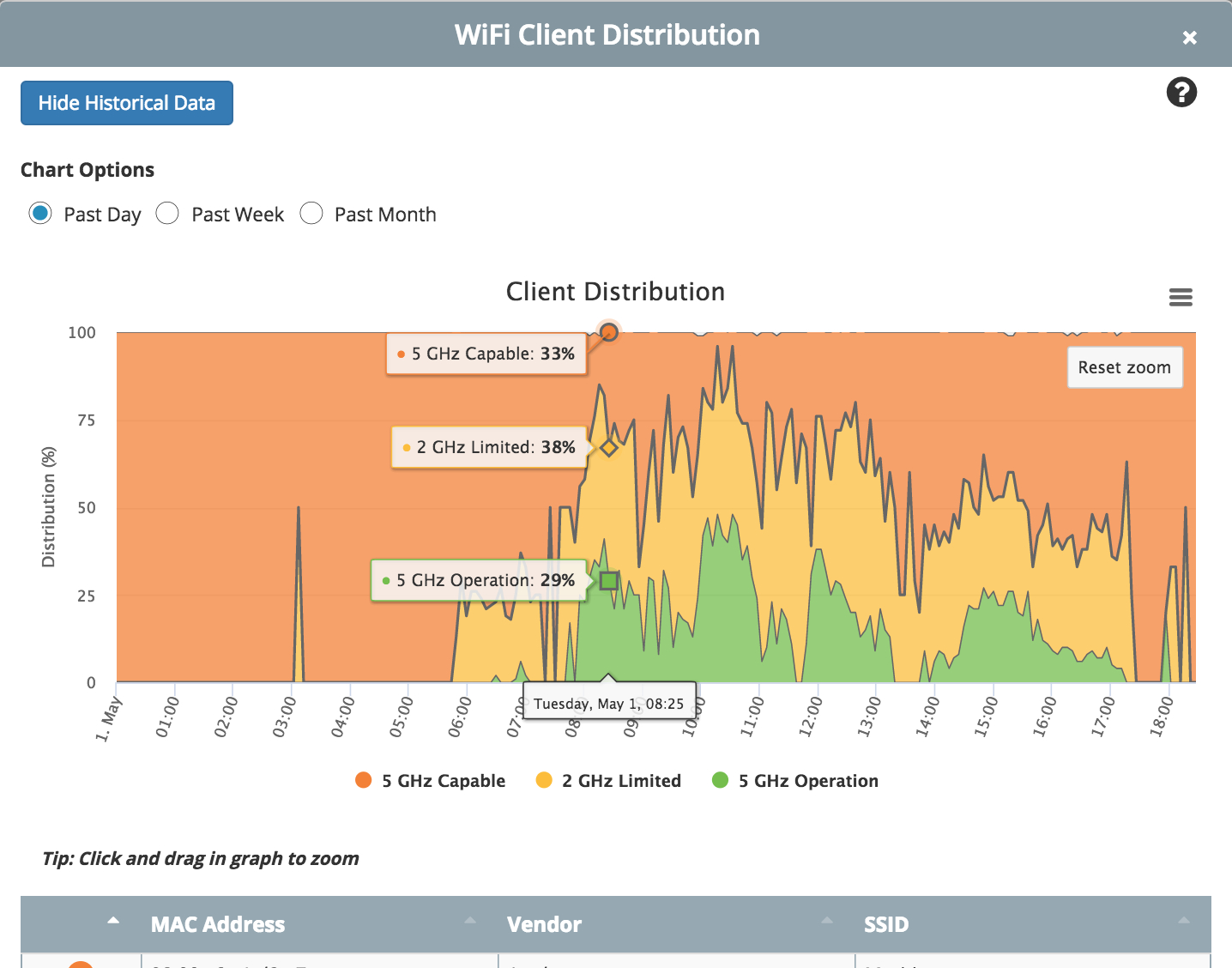

An ever increasing number of businesses are learning that WiFi is more than a convenient network connection.

An ever increasing number of businesses are learning that WiFi is more than a convenient network connection.

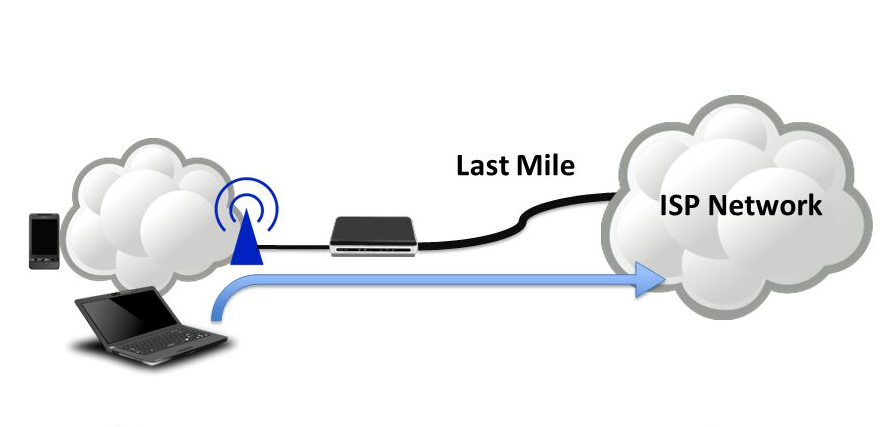

Like many organizations, your foray into

Like many organizations, your foray into  Moving from on-premise to the cloud can offer numerous benefits for businesses and organizations.

Moving from on-premise to the cloud can offer numerous benefits for businesses and organizations. This post is the third in a series addressing concerns organizations may have that prevent them from moving the cloud-based solutions.

This post is the third in a series addressing concerns organizations may have that prevent them from moving the cloud-based solutions.